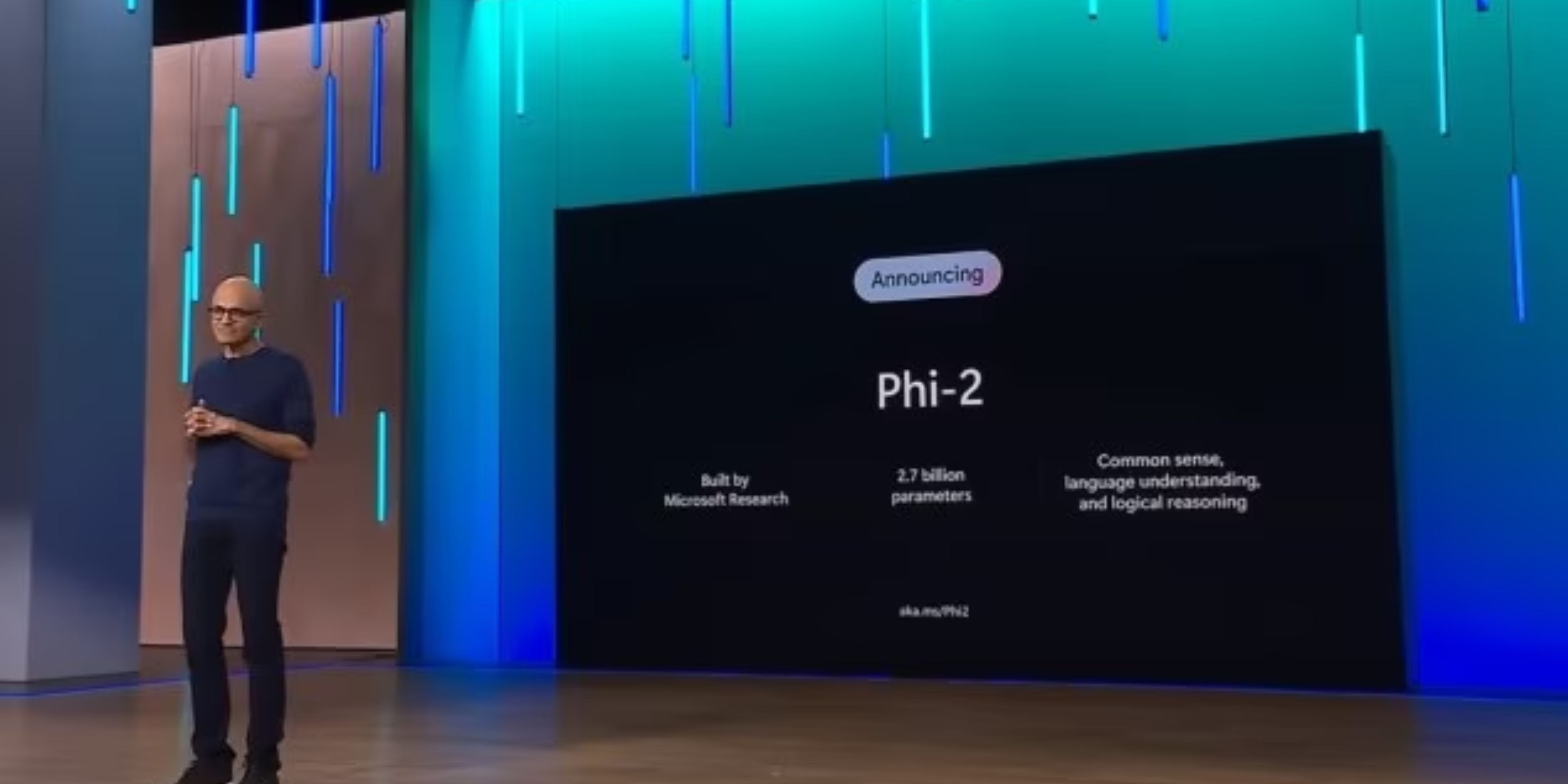

Microsoft has announced it will take decisive action against prompts that could lead its AI-powered image generator to produce violent or sexually explicit content. The move signals the company’s commitment to ethical AI development amid recent concerns about the potential misuse of generative AI systems.

The decision follows concerns raised by Microsoft AI engineer Shane Jones, who submitted a letter to the U.S. Federal Trade Commission (FTC) outlining potential dangers of Copilot’s image generation capabilities. Jones highlighted that certain prompts could result in the creation of disturbingly realistic images, including content depicting violence, underage individuals, and potentially harmful stereotypes.

Microsoft’s response

Acknowledging the concerns raised about Copilot’s potential for abuse, the company has begun implementing a system to block prompts that are likely to produce harmful content. If users enter prompts related to violence, sexual exploitation, or other sensitive topics, Microsoft will display a message indicating a violation of its ethical AI principles.
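
To make the idea concrete, the blocking behavior described above can be illustrated with a minimal sketch of a keyword-based prompt check. This is not Microsoft's implementation, which the article does not describe; the term list, the message wording, and the names `BLOCKED_TERMS`, `VIOLATION_MESSAGE`, `FilterResult`, and `check_prompt` are all assumptions made up for illustration.

```python
import re
from dataclasses import dataclass

# Hypothetical blocklist for illustration only; not an actual Copilot list.
BLOCKED_TERMS = {"violence", "gore", "explicit"}

# Hypothetical wording; the article does not quote the real message.
VIOLATION_MESSAGE = "This prompt has been blocked because it may violate the content policy."


@dataclass
class FilterResult:
    allowed: bool   # True if the prompt may be passed to the image generator
    message: str    # Text shown to the user when the prompt is blocked


def check_prompt(prompt: str) -> FilterResult:
    """Block the prompt if any whole word matches a blocked term (case-insensitive)."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    if words & BLOCKED_TERMS:
        return FilterResult(allowed=False, message=VIOLATION_MESSAGE)
    return FilterResult(allowed=True, message="")


if __name__ == "__main__":
    for text in ["a cat sleeping on a sofa", "a scene of graphic VIOLENCE"]:
        result = check_prompt(text)
        print(text, "->", "allowed" if result.allowed else result.message)
```

Plain keyword matching of this kind is easy to evade with rephrasing, which is one reason filters like the one described would need ongoing refinement.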

“At Microsoft, we believe in responsible AI development. We are committed to ensuring that our AI-powered tools are used for good and not misused in ways that could potentially cause harm,” said a Microsoft spokesperson. “We will continue to refine our content filters and safety measures to protect users and prevent the creation of harmful imagery.”

Challenges of Generative AI

The incident with Microsoft Copilot underscores the complex ethical dilemmas that come with rapid advancements in generative AI. These sophisticated models can produce creative text, translate languages, and generate images with striking realism. However, the technology can also be exploited to create harmful content such as deepfakes, propaganda, and non-consensual images.

AI safety experts have emphasized the need for greater regulation and industry-wide standards to help mitigate the risks associated with generative AI. They caution that AI systems need strong safeguards to prevent them from being weaponized or used to produce disturbing and damaging content.

The road to responsible AI

Microsoft’s move to block problematic prompts in Copilot is a step in the right direction. This demonstrates the company’s willingness to take proactive action. However, it also highlights the ongoing challenge of ensuring the ethical and responsible use of AI.

Tech companies across the board will need to continuously refine safety protocols and establish industry standards for generative AI tools. Open communication with both regulators and AI safety experts will be crucial as AI systems become even more powerful.

Chhavi Tomar is a dynamic person who works as an Editor for The Writing Paradigm. She studied B.Sc. Physics and is currently doing a B.Ed. She has more than three years of experience in editing, gained through freelance projects. Chhavi is skilled in technology editing and is actively improving her abilities in this field. Her dedication to accuracy and natural talent for technology make her valuable in the changing world of digital content.